The Problem with AI Sounding Confident

Artificial intelligence has changed how we search, work, and think. But while it delivers information faster than ever, it also reveals something deeply human: our tendency to trust whatever sounds confident. This is the quiet psychological trap behind the phrase “Confidence is key.”

When an AI answers with calm precision and fluent grammar, it feels right, even if it is wrong. Studies show that people are more likely to believe advice from an algorithm than from another person, especially when the answer sounds certain. The danger is not only technical but cognitive: confidence, not truth, becomes the deciding factor.

- Certainty Bias: We tend to equate fluent, precise language with accuracy.

- AI Illusion: Models sound sure even when they are guessing.

- Hidden Lesson: The problem is not only in the model; it is also in us, for believing it.

Why Confidence Feels Like Truth

Our brains reward confidence. From classrooms to boardrooms, people who speak with conviction are more likely to be trusted, promoted, and remembered. AI unintentionally mirrors that same human bias. It rarely hesitates and almost never admits uncertainty. The result is a digital mirror that amplifies our instinct to believe what sounds certain.

This effect is known in psychology as the fluency bias. Information that is easier to process (clear, polished, confident) feels more truthful. That is why a smoothly written AI answer often seems more believable than a human one, even when both contain errors.

In daily life, this illusion has consequences. People use chatbots for legal explanations, medical summaries, and financial decisions. When AI produces a fluent but incorrect statement, users may follow it without question. The tone of authority replaces the need for verification.

Even professionals are vulnerable. In business environments, AI-generated summaries or reports can pass internal review simply because they sound right. Over time, this erodes critical thinking and creates misplaced trust in linguistic polish over real accuracy.

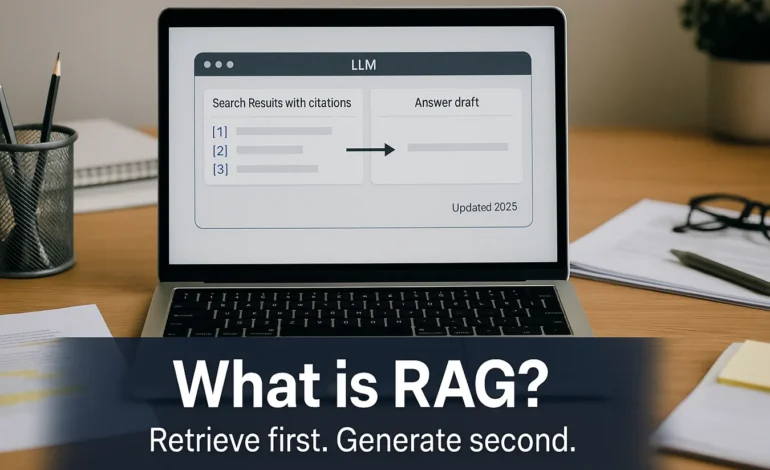

The best safeguard is to ask for proof. Techniques like Retrieval-Augmented Generation (RAG) have the model retrieve sources before forming an answer, so its claims can be checked against them. When a model admits uncertainty, that is a sign of reliability, not weakness.
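To make the idea concrete, here is a minimal sketch of "retrieve first, answer second, and admit uncertainty when nothing is found." It is a toy illustration, not how any particular RAG product works: the two-document corpus, the word-overlap scoring, the `min_overlap` threshold, and the function names are all assumptions made up for this example. A real system would typically use a search index or embeddings for retrieval and a language model to write the answer.

```python
import re
from dataclasses import dataclass

# Hypothetical toy corpus (an assumption for this sketch); a real system
# would query a document store or search index instead.
CORPUS = {
    "doc-1": "Retrieval-augmented generation retrieves sources before answering.",
    "doc-2": "Model collapse can occur when models train on their own recycled outputs.",
}


@dataclass
class Answer:
    text: str
    sources: list[str]  # IDs of the documents that support the answer


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, min_overlap: int = 2) -> list[str]:
    """Return IDs of documents sharing at least `min_overlap` words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(body)), doc_id) for doc_id, body in CORPUS.items()]
    # Highest-overlap documents first; drop anything below the threshold.
    return [doc_id for score, doc_id in sorted(scored, reverse=True) if score >= min_overlap]


def answer(question: str) -> Answer:
    """Answer only from retrieved sources; otherwise say so plainly."""
    sources = retrieve(question)
    if not sources:
        # No evidence found: abstain instead of producing a fluent guess.
        return Answer("I don't know: no supporting source found.", [])
    # Stand-in for generation: quote the best-matching source verbatim.
    return Answer(CORPUS[sources[0]], sources)


if __name__ == "__main__":
    print(answer("What is model collapse?"))
    print(answer("Who won the 1998 World Cup?"))
```

The design choice worth noticing is the empty `sources` list: an answer that arrives without sources is treated as a reason to abstain, which is the opposite of rewarding a confident tone.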

The combination of confident tone and high output fuels a second-order problem: AI Slop. It is the growing flood of generic, AI-generated content that clutters search results and news feeds.

As more synthetic content circulates online, future models risk learning from their own recycled outputs, a process called model collapse. In both cases, confidence amplifies the noise: language that sounds authoritative spreads faster, even when it is wrong.

What began as a stylistic quirk becomes a structural risk. The more fluent AI appears, the less reason people feel to question it. And when skepticism fades, misinformation thrives not through malice, but through misplaced certainty.

Learning to Read Doubt

Confidence itself is not dangerous. Blind belief is. The next step in AI literacy is learning to value uncertainty. Users and designers alike must reward verifiable reasoning over stylistic confidence. Asking for evidence, accepting “I don’t know” as a valid response, and treating AI as a collaborator rather than an oracle are practical ways to stay grounded.

AI does not invent this bias; it magnifies it. The challenge is not to build machines that doubt, but to build humans who do.

In a Nutshell: Confidence Is a Mirror

AI’s fluent tone exposes a human weakness: we mistake certainty for truth. The solution is awareness, not avoidance. Trust evidence over eloquence and let confidence invite curiosity, not obedience.

Last updated: October 11, 2025