What Is Model Collapse in AI?

Model collapse is a serious and often overlooked threat to the long-term future of generative Artificial Intelligence. The term describes a self-referential cycle in which AI models degrade in performance, intelligence, and originality over successive generations because their training data is increasingly made up of AI-generated content.

As the internet is increasingly flooded with synthetic content (AI-generated text and images), future models run a high risk of being trained on data that was created by their predecessors. This internal pollution causes each new generation of AI to become less intelligent, more repetitive, and ultimately, less reliable than the one before it.

- Definition: AI models degrading over generations due to being trained on synthetic (AI-generated) data.

- Outcome: Output becomes repetitive, formulaic, and loses connection to real-world data and human insight.

- Core Analogy: Like making photocopies of a photocopy; the quality degrades each time.

- Solution Focus: Strict data filtering, verifiable human input, and the use of hybrid systems (Neuro-Symbolic, RAG).

The Problem: Why AI Becomes Less Intelligent

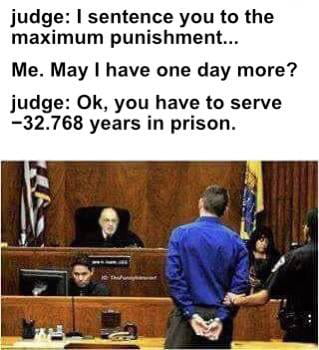

Model collapse is not merely a theoretical problem; it is the statistically expected outcome when models are repeatedly trained on uncurated synthetic data. Two mechanisms drive it: the feedback loop of repetition and the loss of tail data.

When an LLM generates text, that text — if uploaded to the public web (forums, blogs, social media) — enters the vast dataset used to train the next generation of models. The new AI, therefore, learns from its own prior synthetic outputs, reinforcing patterns and biases rather than expanding its knowledge with fresh human data. This data pollution crisis is the primary driver of quality decline.
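The feedback loop can be illustrated with a toy simulation. The sketch below is not an LLM: it assumes a one-dimensional Gaussian stands in for the "model", and the function name and parameters are hypothetical. Each generation is fitted only to samples drawn from the previous generation's fit, with no fresh human data added.

```python
import random
import statistics


def simulate_feedback_loop(generations=200, sample_size=20, seed=0):
    """Toy model: refit a Gaussian to its own samples, generation after generation.

    Returns the fitted standard deviation at each generation, starting with
    the original "human" distribution at index 0.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: assumed stand-in for human data
    history = [std]
    for _ in range(generations):
        # The current model produces synthetic data...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...and the next model is fitted only to that synthetic data.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)  # maximum-likelihood estimate
        history.append(std)
    return history


history = simulate_feedback_loop()
print(f"spread at generation 0:   {history[0]:.4f}")
print(f"spread at generation 200: {history[-1]:.4g}")
```

Under these assumptions the fitted spread tends to shrink toward zero: each fit slightly underestimates the variance of the data it was drawn from, and with no outside data the errors compound instead of averaging out.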

LLMs are fundamentally statistical engines. A model's outputs over-represent its most probable answers, so a corpus of synthetic text contains fewer rare cases than the human data it was derived from. A model trained on that corpus learns to approximate the average or most common answer and effectively forgets the tail data: the rare, complex, or highly specific examples that are crucial for originality and deep reasoning. The result is a homogenizing effect, where outputs sound correct but are bland and predictable, the kind of content often dismissed as “AI slop.”
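The loss of tail data can be sketched in the same spirit. The example below assumes a hypothetical vocabulary of 1,000 "tokens" with Zipf-like frequencies and a "model" that is nothing more than the empirical frequency table of its training sample. All names and parameter values are illustrative and not taken from any real training pipeline.

```python
import random
from collections import Counter


def surviving_tokens(vocab_size=1000, sample_size=2000, generations=10, seed=0):
    """Count how many distinct tokens remain after each generation of resampling."""
    rng = random.Random(seed)
    tokens = list(range(vocab_size))
    # Zipf-like weights: a few very common tokens and a long tail of rare ones.
    weights = [1.0 / (rank + 1) for rank in tokens]
    support_sizes = []
    for _ in range(generations):
        # Generate a synthetic corpus from the current frequency table.
        corpus = rng.choices(tokens, weights=weights, k=sample_size)
        # "Train" the next model: its table is just the observed counts.
        counts = Counter(corpus)
        tokens = list(counts)
        weights = [counts[t] for t in tokens]
        support_sizes.append(len(tokens))
    return support_sizes


print(surviving_tokens())
```

A token that happens to receive zero samples in one generation has zero probability in every later generation, so the number of distinct tokens can only stay flat or fall. Rare tokens are the first to disappear, which is the tail-loss effect described above.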

Consequences: The Real-World Risk to AI

Model collapse is not just an academic concern; it directly impacts the performance and utility of the tools we use every day:

- Reduced Intelligence: Future AI assistants may struggle with tasks they previously excelled at, like complex math, philosophical nuance, or generating truly original art.

- Ethical Drift: Models may lose their carefully curated safety guardrails, learning hostile or biased patterns from polluted synthetic data.

- Security Risk: Synthetic code used to train coding assistants may propagate subtle security vulnerabilities or inefficient architectural patterns across the developer ecosystem.

This decline makes AI less reliable and makes adopting it for high-stakes tasks riskier.

The Solution: Reintroducing the Human Signal

Researchers and industry leaders are actively seeking solutions to break the cycle of model collapse. The answer lies in prioritizing verifiable human input over sheer data volume and in the design of smarter systems.

Data Governance and Provenance

Companies must invest massively in verifiable data provenance — methods to trace whether a piece of data was created by a human or a machine. Techniques like cryptographic watermarking (for AI-generated content) and strict human-review filters are essential to keep synthetic data out of core training sets.
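As a rough illustration of what a provenance filter could look like, the sketch below assumes every record already carries an origin label and a human-review flag. The `Record` fields, the label values, and the `select_core_training_set` function are all hypothetical; a real pipeline would need a reliable way to produce those labels in the first place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    text: str
    origin: str      # hypothetical labels: "human", "synthetic", or "unknown"
    reviewed: bool   # True if a human reviewer confirmed the origin label


def select_core_training_set(records):
    """Keep only records whose human origin has been confirmed by review."""
    return [r for r in records if r.origin == "human" and r.reviewed]


candidates = [
    Record("Field notes from a site visit.", origin="human", reviewed=True),
    Record("Auto-generated product summary.", origin="synthetic", reviewed=True),
    Record("Forum post of unclear origin.", origin="unknown", reviewed=False),
    Record("Blog post, not yet reviewed.", origin="human", reviewed=False),
]

core = select_core_training_set(candidates)
print([r.text for r in core])
```

The filter is deliberately conservative: anything unlabeled or unreviewed is excluded from the core set rather than given the benefit of the doubt.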

Augmented Intelligence (RAG)

The focus is shifting from pure generative models to Augmented Intelligence. Instead of relying solely on massive internal memory, models are being designed to check external, human-verified data sources before answering. This is the foundation of Retrieval-Augmented Generation (RAG), which grounds the model’s output in facts and verifiable information rather than just statistical patterns.
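The retrieval step of RAG can be sketched without any model at all. The example below assumes a tiny in-memory list of human-verified passages and uses simple word overlap as the relevance score; production systems typically use embedding-based search instead. The function names and prompt wording are hypothetical, and the call to a language model is intentionally left out.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(query, documents, top_k=2):
    """Assemble a prompt that asks the model to answer from the retrieved sources."""
    passages = retrieve(query, documents, top_k)
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


verified_sources = [
    "Model collapse was described as degradation from recursive training.",
    "Bananas are rich in potassium.",
]
print(build_grounded_prompt("What is model collapse?", verified_sources, top_k=1))
```

The design point is that the answer is constrained by text fetched at query time from a curated source, so the quality of that source matters as much as the quality of the model.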

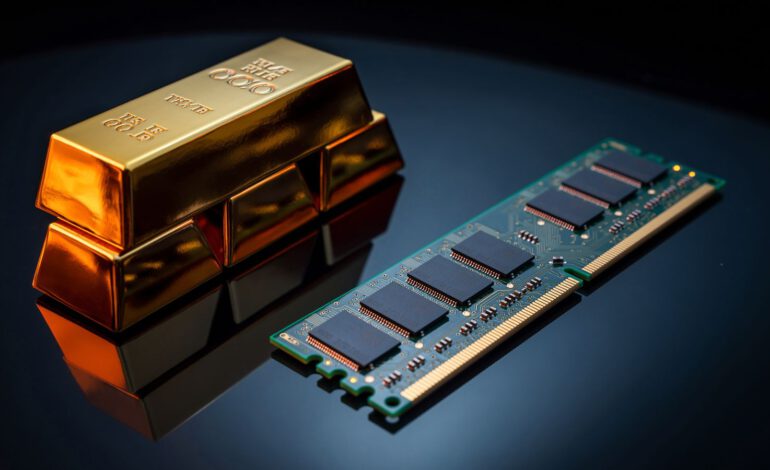

The Irreplaceable Role of Human Expertise (E-E-A-T)

Ultimately, human input remains the irreplaceable “gold standard.” This is the idea behind E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness): unique experience, original research, and critical editorial oversight become more valuable as synthetic content multiplies. A healthy web depends on humans continuing to produce quality content that AI cannot replicate, which in turn keeps a high-quality data pool available for the next generation of models.

FAQ: AI Model Integrity

Q: Is model collapse inevitable for all AI systems?

No, it is not inevitable. Model collapse is a risk that can be mitigated through strict data governance, prioritizing human-created data, and developing specialized verification filters for synthetic content.

Q: What is “tail data” and why is its loss critical?

Tail data refers to the rare, non-average, and specific examples in a dataset. Its loss makes AI output generic and predictable, as the model only learns the most common patterns and loses its ability to handle complex, unusual cases.

Q: How does model collapse affect creative fields?

In creative fields (art, music, writing), model collapse leads to homogenization. Since the AI learns from previous AI-generated creative outputs, it produces less original work, reinforcing existing styles and making novel ideas statistically improbable.

In a Nutshell: Intelligence by Analogy

Model Collapse is the dangerous process where AI models degrade by training on their own synthetic data. The cycle leads to less intelligent, repetitive output. The ultimate defense is a commitment to high-quality, human-verified data and the adoption of augmented intelligence systems like RAG.

Last updated: October 10, 2025