What is Neuro-Symbolic AI

What is Neuro-Symbolic AI (NSAI)? NSAI is the strategic fusion of modern deep learning (neural) with classical, rule-based systems (symbolic) to create AI models that are both powerful and transparent.

- Tackles the Black Box: NSAI addresses the transparency problem inherent in deep learning by routing decisions through explicit rules, making them traceable and auditable.

- Combines Strengths: It merges the pattern recognition of neural networks with the logical precision of symbolic systems.

- Future-Proofing: This hybrid architecture is crucial for the reliable adoption of AI in regulated, high-stakes fields like medicine and finance.

Definition: Bridging Logic and Association

Neuro-Symbolic AI represents a crucial step in AI evolution. For years, development followed two separate paths: the intuitive, but opaque, power of neural networks and the explainable, but often brittle, nature of symbolic systems. NSAI aims to bridge this divide, producing systems that can not only recognize patterns but also reason about them logically.

This hybrid approach is gaining significant traction, in part because research from the MIT-IBM Watson AI Lab reported that neuro-symbolic models handled complex logical reasoning problems with higher data efficiency than purely deep-learning models.

Understanding the difference is crucial:

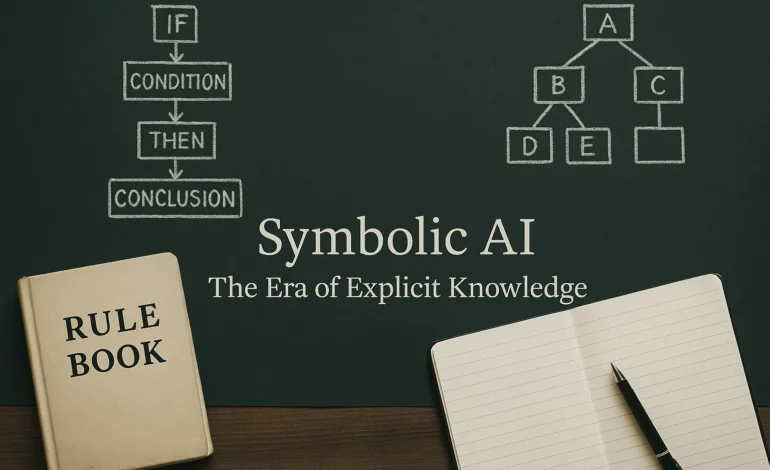

- Symbolic Reasoning: Classical approach based on explicit rules (IF-THEN). Strengths: Precision, Explainability. Weaknesses: Struggles with ambiguity, requires predefined rules. (See also: What is Symbolic AI?)

- Sub-Symbolic Reasoning: Modern approach based on learned patterns (Neural Networks). Strengths: Handles unstructured data, powerful prediction. Weaknesses: Lack of transparency (“Black Box”), low logical robustness.
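To make the contrast concrete, here is a toy sketch in Python. The bird example, feature names, and weights are all hypothetical: the weights stand in for values a model would learn from data, and a one-layer logistic model stands in for a deep network.

```python
import math

# Symbolic: an explicit, human-readable IF-THEN rule.
def symbolic_is_bird(features: dict) -> bool:
    # IF has_feathers AND lays_eggs THEN bird
    return bool(features.get("has_feathers", False)) and bool(features.get("lays_eggs", False))

# Sub-symbolic: the "knowledge" lives in numeric weights, not in readable rules.
# These weights are hypothetical placeholders for values learned from data.
WEIGHTS = {"has_feathers": 3.1, "lays_eggs": 1.4, "has_fur": -2.7}
BIAS = -2.0

def neural_bird_probability(features: dict) -> float:
    # Weighted sum of the input features, squashed into (0, 1) by a sigmoid.
    z = BIAS + sum(w * float(features.get(name, False)) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

if __name__ == "__main__":
    penguin = {"has_feathers": True, "lays_eggs": True}
    platypus = {"has_fur": True, "lays_eggs": True}
    print(symbolic_is_bird(penguin), round(neural_bird_probability(penguin), 3))
    print(symbolic_is_bird(platypus), round(neural_bird_probability(platypus), 3))
```

The rule's justification can be read directly from the code; the model's answer is a probability whose rationale is spread across numeric weights, which becomes far harder to inspect once there are millions of them.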

The Architecture: Neural Perception Meets Symbolic Cognition

The core architecture of NSAI involves the elegant interplay of two distinct modules, combining perceptual intelligence with cognitive structure. You can think of this process as a two-stage assembly line:

The Neural Module (Perception)

This component, typically a deep neural network, serves as the perceptual and feature-extraction layer. Its function is to handle the raw, unstructured data of the real world: identifying objects in an image or recognizing entities in text. It translates these inputs into clear symbolic representations, which act as the bridge to the next stage.
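A minimal sketch of the hand-off from perception to symbols, assuming the neural network has already produced one row of logits per detected object. The label set, object IDs, and the 0.8 confidence threshold are hypothetical; a real system would take the logits from a trained detector rather than a hard-coded dict.

```python
import math

LABELS = ["car", "pedestrian", "traffic_light"]  # hypothetical label set

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def to_symbols(detections, min_confidence=0.8):
    """Turn per-object logits into symbolic (subject, predicate, object, confidence) facts.

    detections: dict mapping an object id to a list of logits, one per label.
    Only objects whose top class clears min_confidence become facts.
    """
    facts = []
    for obj_id, logits in detections.items():
        probs = softmax(logits)
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= min_confidence:
            facts.append((obj_id, "is_a", LABELS[best], round(probs[best], 3)))
    return facts

if __name__ == "__main__":
    # obj1 is a confident "car"; obj2 is ambiguous and gets dropped.
    print(to_symbols({"obj1": [4.0, 0.5, 0.1], "obj2": [1.0, 1.1, 0.9]}))
```

Low-confidence detections are dropped rather than passed on, so the symbolic module only reasons over facts the network is reasonably sure of.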

The Symbolic Module (Cognition)

This component, based on classical AI principles, acts as the cognitive engine. Its primary function is to apply logical rules, constraints, and relational knowledge (often stored in a Knowledge Graph). It uses the cleaned outputs from the neural module to perform deduction, inference, and planning. This ensures that the final decision of the system adheres to explicit, verifiable rules.
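The symbolic module's deduction can be sketched as simple forward chaining over (subject, relation, object) triples, a common way to represent knowledge graph facts. The facts and both rules below are hypothetical examples; production systems would more likely use a dedicated rule engine, a Datalog engine, or a knowledge graph reasoner than hand-written Python functions.

```python
# Hypothetical facts, e.g. produced by the neural module plus a small ontology.
FACTS = {
    ("obj1", "is_a", "car"),
    ("car", "subclass_of", "vehicle"),
    ("obj1", "located_in", "crosswalk"),
}

def rule_inherit_type(kb):
    # IF x is_a C AND C subclass_of D THEN x is_a D
    return {(x, "is_a", d)
            for (x, r1, c) in kb if r1 == "is_a"
            for (c2, r2, d) in kb if r2 == "subclass_of" and c2 == c}

def rule_yield_at_crosswalk(kb):
    # IF x is_a vehicle AND x located_in crosswalk THEN x must_yield_to pedestrian
    return {(x, "must_yield_to", "pedestrian")
            for (x, r, t) in kb if r == "is_a" and t == "vehicle"
            if (x, "located_in", "crosswalk") in kb}

RULES = [rule_inherit_type, rule_yield_at_crosswalk]

def forward_chain(kb, rules):
    """Apply every rule repeatedly until no new facts appear (a fixed point)."""
    kb = set(kb)
    while True:
        new = set().union(*(rule(kb) for rule in rules)) - kb
        if not new:
            return kb
        kb |= new

if __name__ == "__main__":
    for fact in sorted(forward_chain(FACTS, RULES)):
        print(fact)
```

Every derived fact comes from a named rule applied to known facts, so each conclusion could be traced back to the rule and premises that produced it; recording that provenance is a small extension of this sketch.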

Use Cases: Why NSAI Matters in High-Stakes Fields

The strength of the hybrid approach becomes clear in regulated environments where trust and explainability are non-optional. Here, NSAI offers a unique, auditable solution:

A major bottleneck in insurance is the FNOL (First Notice of Loss) process. A purely neural system might predict the likelihood of fraud, but it cannot readily explain the rationale behind that prediction. NSAI tackles this step by step:

- Neural Input: An LLM reads the unstructured claim narrative (text).

- Symbolic Extraction: It extracts key entities (Symbols: “Car Model,” “Driver Name,” “Incident Location,” “Policy Clause X”).

- Logical Reasoning: A rule-based engine checks: “IF Policy Clause X is active AND Incident Location is a covered area, THEN Claim is VALID.”

- Auditable Decision: The system reaches a verifiable outcome (Valid/Invalid) based on the explicit rule set.
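The four FNOL steps above can be sketched end to end in Python, under loud assumptions: the LLM is replaced by a hypothetical regular-expression stub (`extract_entities`), and the policy data (`ACTIVE_CLAUSES`, `COVERED_AREAS`) and claim narratives are invented. What carries over is the structure: unstructured text in, explicit symbols out, a readable rule applied, and a trace of every check kept for audit.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy data: which clauses are active, which areas are covered.
ACTIVE_CLAUSES = {"X"}
COVERED_AREAS = {"Berlin", "Munich"}

def extract_entities(narrative: str) -> dict:
    """Stand-in for the neural step. A real system would prompt an LLM;
    here simple regular expressions pull out the same symbols."""
    clause = re.search(r"[Cc]lause (\w+)", narrative)
    location = re.search(r"\bin ([A-Z][a-z]+)", narrative)
    return {
        "policy_clause": clause.group(1) if clause else None,
        "incident_location": location.group(1) if location else None,
    }

@dataclass
class Decision:
    valid: bool
    trace: list = field(default_factory=list)  # human-readable audit trail

def evaluate_claim(entities: dict) -> Decision:
    """Rule: IF clause is active AND location is covered THEN claim is VALID."""
    trace = []
    clause_ok = entities["policy_clause"] in ACTIVE_CLAUSES
    trace.append(f"Policy clause {entities['policy_clause']!r} active: {clause_ok}")
    area_ok = entities["incident_location"] in COVERED_AREAS
    trace.append(f"Location {entities['incident_location']!r} covered: {area_ok}")
    valid = clause_ok and area_ok
    trace.append(f"Decision: {'VALID' if valid else 'INVALID'}")
    return Decision(valid, trace)

if __name__ == "__main__":
    claim = "Rear-ended in Berlin on 3 May; claim filed under Clause X."
    decision = evaluate_claim(extract_entities(claim))
    for line in decision.trace:
        print(line)
```

Swapping the stub for a real LLM call would change only `extract_entities`; the rule and its audit trail stay the same, which is the point of keeping the decision logic symbolic.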

This verifiable process is why organizations such as DARPA have emphasized explainability in their AI research programs and funded work on systems like NSAI.

Other crucial applications include medical diagnosis (where decisions must be justified), autonomous vehicles (where safety rules must be logically enforced) and legal tech (where case analysis requires symbolic coherence).

Challenges, Boundaries, and the Path Forward

Despite the vast potential, integrating these two fundamentally different systems remains a core research challenge, as highlighted in recent publications by Stanford University on the future of AI architecture:

- Interface Problem: Creating a reliable "neural-to-symbolic" interpreter, a mechanism that smoothly converts messy neural outputs into clean, usable symbols, is technically complex.

- Scalability: Managing and updating the massive Knowledge Graphs required by the symbolic component can be resource-intensive compared to simple retraining of a neural network. (Further reading: What are Knowledge Graphs?)
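One common way to soften the interface problem is to constrain what the neural side may hand over: normalize its output, map it onto a fixed vocabulary the symbolic engine understands, and abstain when the model is unsure or the text matches nothing. The sketch below assumes the neural component returns a clause string plus a confidence score; the vocabulary, aliases, and 0.7 threshold are hypothetical.

```python
from typing import Optional

# Hypothetical controlled vocabulary the symbolic engine understands.
CANONICAL_CLAUSES = {"X", "Y", "Z"}
# Hypothetical aliases observed in messy model output, mapped to canonical symbols.
ALIASES = {"clause x": "X", "section x": "X", "clause y": "Y"}

def ground_clause(raw: str, confidence: float, min_confidence: float = 0.7) -> Optional[str]:
    """Map a messy neural output to a canonical symbol, or return None to abstain."""
    if confidence < min_confidence:
        return None  # too uncertain: defer to a human rather than guess
    # Normalize hyphens, whitespace, and case before matching.
    text = " ".join(raw.replace("-", " ").split()).lower()
    if text.upper() in CANONICAL_CLAUSES:
        return text.upper()
    return ALIASES.get(text)  # None if the text matches nothing known

if __name__ == "__main__":
    print(ground_clause("  Clause-X ", 0.93))      # alias match
    print(ground_clause("x", 0.9))                 # direct match
    print(ground_clause("Clause X", 0.4))          # abstains: low confidence
    print(ground_clause("the usual clause", 0.95)) # abstains: unknown text
```

Returning `None` instead of a best guess keeps uncertain cases out of the rule engine, at the cost of routing more claims to manual review; maintaining the alias table by hand is itself a small example of the upkeep burden described under Scalability.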

The future direction strongly points toward the refinement of these hybrid systems. Researchers aim to build models that are both powerful and explainable, a critical step for regulatory approval, public trust, and high-stakes applications.

In a Nutshell: Intelligence with Accountability

Neuro-Symbolic AI is the strategic convergence of deep learning and symbolic logic. It moves AI past simple pattern recognition toward verifiable, reliable reasoning and planning. By combining neural intuition with logical structure, NSAI is paving the way for the next generation of trustworthy, high-stakes intelligent systems.

What to do now: If you are building or buying an AI solution for high-stakes decision-making, focus on the model’s explainability layer. Research Knowledge Graph tools, as they are a fundamental prerequisite for applying symbolic logic to real-world data.