OpenAI Cuts GPT-4.1 Prices to Stay Ahead in the AI Race

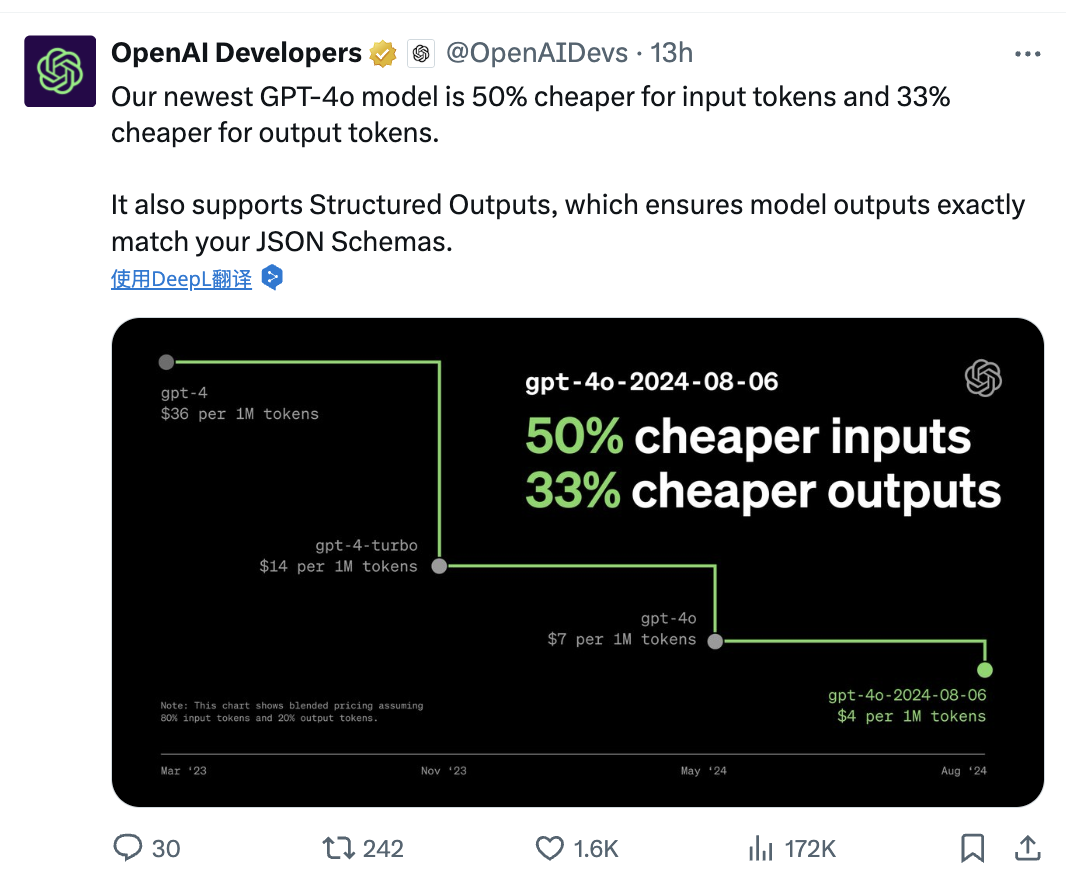

New models, lower costs — OpenAI is moving fast to stay competitive. In a market flooded with emerging AI startups and aggressive pricing from rivals like Anthropic and Mistral, OpenAI is making a bold move. The company has officially announced significant price reductions for its latest generation of models, including GPT-4.1 and GPT-4.1 Mini.

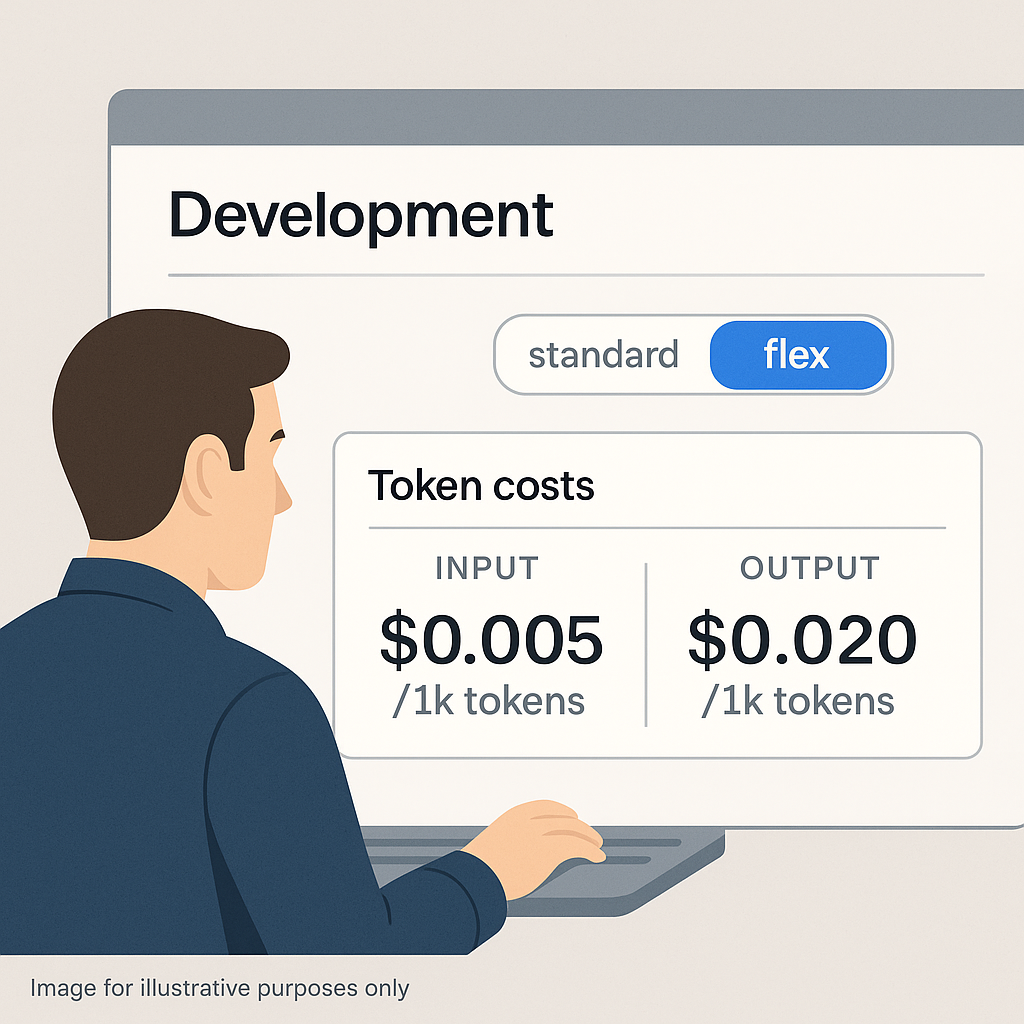

Introducing Flex Processing: 50% Off for Non-Urgent Tasks

OpenAI has also introduced Flex Processing, a beta feature that offers a 50% discount on API costs for the o3 and o4-mini models. This option is ideal for non-urgent tasks, as it trades off processing speed for cost savings. Pricing details:

- o3 model: $5 per million input tokens (down from $10), $20 per million output tokens (down from $40)

- o4-mini model: $0.55 per million input tokens (down from $1.10), $2.20 per million output tokens (down from $4.40)

By setting the service_tier parameter to "flex" in their API requests, users can access these discounted rates.
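To make the savings concrete, here is a minimal sketch that estimates the cost of a job at standard and Flex rates. The prices are the per-million-token figures quoted above; the `PRICES` table and the `estimate_cost` helper are illustrative names, not part of any OpenAI library.

```python
# USD per million tokens as (input, output), taken from the figures quoted above.
PRICES = {
    "o3": {"standard": (10.00, 40.00), "flex": (5.00, 20.00)},
    "o4-mini": {"standard": (1.10, 4.40), "flex": (0.55, 2.20)},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  tier: str = "standard") -> float:
    """Return the estimated cost in USD for one job (hypothetical helper)."""
    input_price, output_price = PRICES[model][tier]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Example: a batch job with 2M input tokens and 500K output tokens on o3.
standard = estimate_cost("o3", 2_000_000, 500_000)
flex = estimate_cost("o3", 2_000_000, 500_000, tier="flex")
print(f"standard: ${standard:.2f}, flex: ${flex:.2f}")
# prints "standard: $40.00, flex: $20.00"
```

Because both input and output prices are halved, the Flex estimate is always half the standard one for the same token counts.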

It’s important to note that Flex Processing is currently in beta and may experience slower response times or occasional resource unavailability. Additionally, access to the o3 model under Flex Processing may require identity verification for users in lower usage tiers.
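Given those caveats, a caller may want a fallback path for when Flex capacity is not available. The sketch below builds a request body with `service_tier` set to "flex" and retries once on another tier if the first attempt fails. Everything except the `service_tier` field is an assumption made for illustration: the `send` callable stands in for whatever HTTP or SDK call you use, `ResourceUnavailable` is a hypothetical exception rather than a real library error, and the fallback value "auto" should be checked against the current API reference.

```python
from typing import Callable


class ResourceUnavailable(Exception):
    """Hypothetical error standing in for a 'resources unavailable' response."""


def build_request(model: str, prompt: str, tier: str = "flex") -> dict:
    """Build a chat-style request body with the given service tier."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "service_tier": tier,
    }


def send_with_fallback(send: Callable[[dict], str], model: str, prompt: str,
                       fallback_tier: str = "auto") -> str:
    """Try Flex first; if capacity is unavailable, retry once on fallback_tier."""
    try:
        return send(build_request(model, prompt, tier="flex"))
    except ResourceUnavailable:
        return send(build_request(model, prompt, tier=fallback_tier))


# Demo with a fake sender that rejects Flex requests, to exercise the fallback.
def fake_send(payload: dict) -> str:
    if payload["service_tier"] == "flex":
        raise ResourceUnavailable("no flex capacity")
    return f"answered on tier {payload['service_tier']}"


print(send_with_fallback(fake_send, "o3", "Summarize this report."))
# prints "answered on tier auto"
```

Falling back means paying the standard rate for that request, so for truly non-urgent work it may be preferable to wait and retry on Flex instead.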

Smaller Models, Broader Reach

GPT-4.1 and its variants offer more than just lower prices. The architecture has been optimized to handle longer context windows (up to 1 million tokens), opening new possibilities in document analysis, code generation, and enterprise-scale data processing. This improvement is particularly impactful for industries like law, research, and finance, where large datasets are the norm.

OpenAI is also emphasizing accessibility: by offering different model sizes (Mini, Base, and Nano), the company aims to meet the demands of small developers as well as large-scale enterprise clients. In a blog post, OpenAI described this evolution as “the start of a new phase where intelligence meets efficiency at every scale.”

Major clients like Carlyle and Thomson Reuters have already reported improved performance and cost-efficiency using the new versions, particularly GPT-4.1 Mini, which performs well even under resource constraints.

Staying Competitive in a Changing Market

The AI landscape in 2025 is markedly different from just a year ago. Google, Meta, and open-source consortia are pushing hard to lower AI costs while boosting speed and flexibility. OpenAI’s price cuts are clearly a response to this pressure—and a message to developers: you don’t have to choose between affordability and quality. Whether these changes will be enough to fend off rising competitors remains to be seen. But for now, OpenAI has reasserted its position not just as a tech leader, but as a practical partner for anyone building the future of AI.

Christoph

Explores the future of AI

Sharing insights, tools, and resources to help others understand and embrace intelligent technology in everyday life.