What Is an LLM? Decoding the ‘Large’ in Language Models

A Large Language Model (LLM) is a type of deep learning system characterized by its massive scale: it is trained on trillions of words and contains billions, or even trillions, of parameters. Tools like OpenAI’s GPT series and Google’s Gemini are prime examples of LLMs.

- Scale is Key: The “Large” refers to the model’s huge parameter count and massive training data (trillions of tokens).

- Emergent Abilities: LLMs show capabilities (like coding and complex reasoning) that small LMs lack, and these appear to arise largely from scale.

- Training Stages: Modern LLMs are refined through post-training steps like RLHF to align them with human instructions.

Training and the Scale Factor

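The training process involves feeding the model huge amounts of text (books, websites, and dialogues) and letting it learn which words tend to follow which. Because the “labels” are simply the next words in the text itself, this is known as self-supervised learning. Before training, text is broken down into tokens (words or word fragments); each token is mapped to an integer ID and then to a numerical vector (an embedding) that the model processes mathematically.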
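To make the idea concrete, here is a minimal Python sketch of self-supervised next-token prediction. It assumes a toy tokenizer that splits on whitespace and numbers each distinct word (real LLMs use subword tokenizers such as byte-pair encoding), and all function names are hypothetical. The training labels are just the following tokens of the text itself, and the bigram counter is the crudest possible version of learning which words tend to follow which.

```python
from collections import Counter, defaultdict


def build_vocab(text: str) -> dict[str, int]:
    """Toy tokenizer: give each distinct whitespace-separated word an integer ID."""
    vocab: dict[str, int] = {}
    for word in text.split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Turn text into a list of token IDs."""
    return [vocab[word] for word in text.split()]


def next_token_pairs(ids: list[int]) -> list[tuple[list[int], int]]:
    """Self-supervision: every prefix is an input, the token after it is the label."""
    return [(ids[:i], ids[i]) for i in range(1, len(ids))]


def bigram_counts(ids: list[int]) -> dict[int, Counter]:
    """Count which token follows which (the simplest possible 'language model')."""
    counts: dict[int, Counter] = defaultdict(Counter)
    for prev, nxt in zip(ids, ids[1:]):
        counts[prev][nxt] += 1
    return counts


corpus = "the cat sat on the mat the cat ran"
vocab = build_vocab(corpus)
ids = encode(corpus, vocab)
pairs = next_token_pairs(ids)
model = bigram_counts(ids)
print(model[vocab["the"]].most_common(1))
```

In this tiny corpus the most frequent follower of “the” is “cat”. An LLM learns a far richer version of the same statistics over much longer contexts, using a neural network instead of a count table.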

A widely cited finding in LLM development is that beyond a certain scale, models exhibit **emergent abilities**. These are capabilities that seem to “emerge” only once the model is large enough and are largely absent in smaller models. Examples include In-Context Learning (picking up a new task from examples given in the prompt) and multi-step Chain-of-Thought Reasoning.
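In-context learning means the model picks up a task from demonstrations placed in its prompt, without any update to its weights. The hypothetical helper below only assembles such a few-shot prompt; the “Input:/Output:” layout is one common convention rather than a required format, and sending the prompt to an actual model is left out.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked demonstrations, new input.

    No weights change; any 'learning' comes only from the demonstrations
    the model sees in its context window.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cat", "chat"), ("dog", "chien")],
    "bird",
)
print(prompt)
```

A large model given this prompt would typically continue the pattern with the French word; a small LM is far less likely to infer the task from two examples.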

- Parameters: LLMs typically have billions (e.g., 7B, 70B) to trillions of parameters; these learned weights set the model’s capacity to capture complex relationships.

- Data Volume: Training often involves trillions of tokens (words or word fragments), far more than earlier LMs were trained on.

- Context Window: LLMs can process a much larger “context window” (the maximum number of tokens the model can take into account at once), which is essential for long conversations.
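A back-of-the-envelope estimate shows where parameter counts like 7B come from. This sketch assumes a standard decoder-only Transformer in which each layer holds about 12·d² weights (four d×d attention projections plus a feed-forward block with hidden width 4d); it ignores biases, layer norms, and positional embeddings, and counts the token embedding once. The layer counts and widths are illustrative inputs, not the specification of any particular product.

```python
def approx_transformer_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a decoder-only Transformer.

    Per layer: 4 * d_model^2 for the Q, K, V and output projections, plus
    8 * d_model^2 for a feed-forward block of hidden size 4 * d_model.
    The token embedding is assumed to be shared with the output layer.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


small = approx_transformer_params(n_layers=12, d_model=768, vocab_size=50257)
large = approx_transformer_params(n_layers=32, d_model=4096, vocab_size=32000)
print(f"{small / 1e6:.1f}M parameters")  # GPT-2-small-like shape
print(f"{large / 1e9:.2f}B parameters")  # hypothetical "7B-class" shape
```

The first shape (12 layers, width 768, a 50,257-token vocabulary) comes out near 123.5 million, close to the roughly 124 million usually quoted for GPT-2 small; the second, hypothetical shape lands around 6.6 billion, in the “7B” class. Because the per-layer term grows with the square of the width, widening a model is the fastest way to inflate its parameter count.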

This immense scale drives the high cost of training, demanding supercomputers and vast energy resources. (Source: Research Papers from [Authoritative Source 1], e.g., Meta/Google AI).
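A widely used rule of thumb from the scaling-law literature puts training compute at roughly 6 FLOPs per parameter per training token (about 2 for the forward pass and 4 for the backward pass). The sketch below applies it to a hypothetical 7-billion-parameter model trained on 2 trillion tokens and assumes a sustained throughput of 4×10¹⁴ FLOP/s per accelerator; real utilization varies widely, so treat the result as an order of magnitude only.

```python
SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per training token."""
    return 6.0 * n_params * n_tokens


def accelerator_days(total_flops: float, flops_per_second: float) -> float:
    """Days of compute on a single accelerator at the given sustained throughput."""
    return total_flops / flops_per_second / SECONDS_PER_DAY


flops = training_flops(n_params=7e9, n_tokens=2e12)    # hypothetical model and dataset
days = accelerator_days(flops, flops_per_second=4e14)  # assumed sustained throughput
print(f"{flops:.2e} FLOPs, about {days:,.0f} accelerator-days")
```

That works out to about 8.4×10²² FLOPs, or roughly 2,430 accelerator-days: a cluster of 1,000 such chips would need about two and a half days, before accounting for failures and restarts.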

The Transformer Architecture: Why Attention Won

What truly made models like GPT revolutionary was their use of the Transformer architecture. The core innovation is the **Attention Mechanism**, which lets the model look at **all parts of a sequence simultaneously** (in GPT-style models, each token attends to every earlier token). Because every token is connected directly to the others, the model can keep track of long-range dependencies and maintain context, tone, and topic over long stretches of text. This largely solved a fundamental problem of older models (like RNNs and LSTMs), which process text one step at a time and struggled to remember information from the beginning of a long text.
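The attention computation itself is compact. Below is a minimal pure-Python sketch of single-head scaled dot-product attention, softmax(QKᵀ/√d)·V, with an optional causal mask so each token sees only itself and earlier tokens, as in GPT-style models. It omits the learned projection matrices, multiple heads, and batching of a real Transformer layer; here the same vectors serve as queries, keys, and values purely for illustration.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]],
              keys: list[list[float]],
              values: list[list[float]],
              causal: bool = True) -> list[list[float]]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each argument is a list of vectors, one per token. With causal=True,
    token i only attends to tokens 0..i, as in GPT-style decoders.
    """
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        visible = range(i + 1) if causal else range(len(keys))
        # Similarity of this token's query with each visible key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in visible]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [0.0] * len(values[0])
        for w, j in zip(weights, visible):
            for k, v in enumerate(values[j]):
                out[k] += w * v
        outputs.append(out)
    return outputs


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(x, x, x)
print(out)
```

The first token can only attend to itself, so its output equals its own vector; later tokens receive a weighted blend of every vector up to their position, with weights that sum to one. Every token's scores can be computed independently, which is what lets Transformers process a whole sequence in parallel during training.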

The training process occurs in two distinct stages:

- Pre-training (Foundational): The model learns grammar, syntax, and world patterns from raw data, creating a highly knowledgeable but unaligned **foundation model** (often called a base model).

- Fine-Tuning (Alignment): The model is aligned with human intent using techniques such as supervised instruction tuning and **RLHF** (Reinforcement Learning from Human Feedback), turning it into a safer, instruction-following conversational AI. (Source: [Authoritative Source 2] on RLHF).
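In a typical RLHF pipeline, human labelers compare pairs of answers, and those comparisons train a separate reward model that later guides reinforcement-learning updates to the LLM. One common objective for the reward model is the pairwise (Bradley-Terry style) loss -log σ(r_chosen - r_rejected), sketched below; the reward scores are made-up numbers, the function names are hypothetical, and the reinforcement-learning step itself (often PPO) is not shown.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)) for one human comparison."""
    margin = reward_chosen - reward_rejected
    # Two algebraically equal forms, chosen so that math.exp never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def batch_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_reward, rejected_reward) pairs."""
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical reward-model scores for three human comparisons.
comparisons = [(2.0, 0.5), (1.0, 1.0), (-0.5, 1.5)]
print(batch_loss(comparisons))
```

The loss shrinks as the reward model ranks the human-preferred answer higher and grows roughly linearly when it ranks the pair the wrong way round; training minimizes its average over many labeled comparisons.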

This distinction is crucial for understanding the current market:

LMs are **prediction engines**; LLMs are **foundational prediction engines at scale** (with emergent abilities); and Instruction Models (like ChatGPT) are **fine-tuned LLMs** designed to be safe and follow human commands.

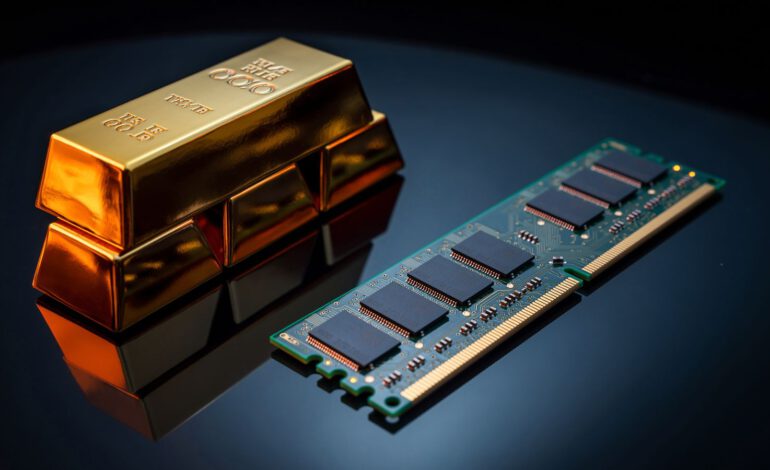

Cost, Optimization, and Inference

The sheer size of LLMs creates significant technical challenges after deployment. The **inference cost** (the cost to run the model once it’s deployed) is much higher than that of traditional LMs, because the model’s billions of parameters are involved in computing every generated token. This economic pressure drives constant innovation in optimization techniques:
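Two rules of thumb make that cost concrete: generating one token with a dense model takes roughly 2 FLOPs per parameter, and merely holding the weights takes the parameter count times the bytes per weight. The sketch assumes a hypothetical 7-billion-parameter model and ignores activations, the KV cache, and other runtime overheads.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def flops_per_token(n_params: float) -> float:
    """Rule of thumb: one forward pass costs ~2 FLOPs per parameter per token."""
    return 2.0 * n_params


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Memory needed just to store the weights at the given precision."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


n = 7e9  # hypothetical 7B-parameter model
print(f"{flops_per_token(n):.1e} FLOPs per generated token")
for precision in BYTES_PER_PARAM:
    print(precision, f"{weight_memory_gb(n, precision):.1f} GB")
```

A 500-token answer therefore costs on the order of 7×10¹² FLOPs, and at 32-bit precision the weights alone need 28 GB versus 3.5 GB at 4-bit, which is the kind of saving lower-precision storage targets.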

- Quantization: Reducing the precision of the weights (e.g., from 32-bit to 4-bit) to save memory and speed up computation.

- KV-Caching: Storing the attention key and value vectors of previously processed tokens so they are not recomputed each time a new token is generated.

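- RAG (Retrieval-Augmented Generation): Used to “ground” LLMs in external, verified facts (stored in document collections, vector databases, or Knowledge Graphs) to reduce factual errors, which can let a smaller model answer knowledge-heavy questions without memorizing every fact in its parameters.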
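Quantization can be illustrated with the simplest scheme, symmetric absmax quantization with one shared scale per tensor: divide each weight by a scale, round to a small signed integer, and multiply back when the weight is needed. This is an illustrative sketch with hypothetical function names; production methods typically use per-channel or per-group scales and more careful rounding.

```python
def quantize_symmetric(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Map floats to signed integers using one shared scale (absmax quantization).

    With bits=4 the integers lie in [-7, 7]; only these small integers plus
    a single float scale need to be stored.
    """
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


w = [0.12, -0.50, 0.33, 0.07, -0.21]
q, scale = quantize_symmetric(w, bits=4)
w_hat = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(w, w_hat))
print(q, scale, max_err)
```

Storing 4-bit integers instead of 32-bit floats cuts weight storage by a factor of eight (plus the small cost of the scale), and each reconstructed weight is off by at most half a scale step.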

These optimization techniques make the models cheaper, faster, and more accessible for widespread application. (For more on symbolic grounding, see research on neuro-symbolic hybrid systems.)
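KV-caching pays off because, during token-by-token generation in a causal model, the keys and values of earlier tokens never change. The sketch below, a single attention head with hypothetical 2×2 projection matrices, appends each new token’s key and value to a cache and projects only that token, then checks the result against recomputing keys and values for the whole prefix. Real implementations keep one such cache per layer and per head, stored as tensors.

```python
import math


def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    """Multiply matrix m (given as rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def attend(q: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention output for a single query."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [sum(e * v[i] for e, v in zip(exps, values)) / total for i in range(len(values[0]))]


class KVCache:
    """Keeps the key and value vectors of every token processed so far."""

    def __init__(self) -> None:
        self.keys: list[list[float]] = []
        self.values: list[list[float]] = []

    def step(self, x, w_q, w_k, w_v) -> list[float]:
        """Process one new token: project only this token, reuse cached K/V."""
        self.keys.append(matvec(w_k, x))
        self.values.append(matvec(w_v, x))
        return attend(matvec(w_q, x), self.keys, self.values)


def no_cache_step(prefix, w_q, w_k, w_v) -> list[float]:
    """Same output without a cache: recompute K and V for the whole prefix."""
    keys = [matvec(w_k, t) for t in prefix]
    values = [matvec(w_v, t) for t in prefix]
    return attend(matvec(w_q, prefix[-1]), keys, values)


# Hypothetical projection matrices and token vectors.
W_Q = [[1.0, 0.5], [0.0, 1.0]]
W_K = [[0.5, 0.0], [1.0, 1.0]]
W_V = [[1.0, 0.0], [0.0, 2.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

cache = KVCache()
cached = [cache.step(t, W_Q, W_K, W_V) for t in tokens]
recomputed = [no_cache_step(tokens[: i + 1], W_Q, W_K, W_V) for i in range(len(tokens))]
print(cached == recomputed)
```

Without the cache, step n recomputes n key and value projections; with it, each step computes just one, at the price of memory that grows with the context length.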

In a Nutshell: Intelligence through Scale

LLMs are defined by their scale, which unlocks abilities that smaller prediction models lack. They are the product of massive data processing, the Transformer architecture, and human alignment, and they are driving the frontier of general-purpose AI.

What to do now: When selecting an LLM for your business, assess not only the parameter count but also the model’s **context window** size and the quality of its **alignment and safety tuning** (e.g., RLHF) to ensure it meets your specific task requirements.

FAQ: Frequently Asked Questions About LLMs

Q: What does RLHF mean?

A: RLHF (Reinforcement Learning from Human Feedback) is a fine-tuning process in which human reviewers compare or rate the model’s answers; their judgments train a reward model, which is then used with reinforcement learning to align the model’s behavior with human preferences and instructions.

Q: How large is the training data for an LLM?

A: The training data volume is typically measured in **trillions of tokens**, far exceeding the volume used for previous language models.

Q: What is “Inference Cost”?

A: The inference cost is the computational expense required to run the model once it is deployed (i.e., the cost to generate a response for a single user query). LLMs have high inference costs due to their size.

Q: Why are LLMs prone to logical errors?

A: LLMs use statistical association, not true logical deduction. They rely on patterns learned from training data, making them susceptible to errors when complex, multi-step logical reasoning is required.