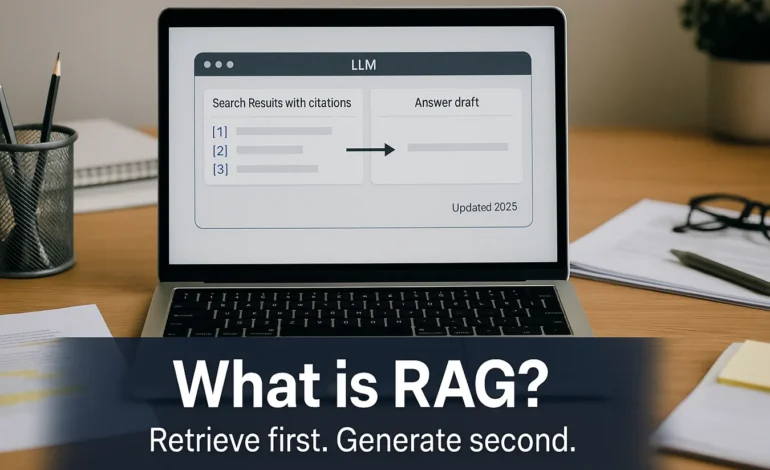

What is RAG? (Retrieval-Augmented Generation Explained)

Retrieval-Augmented Generation (RAG) is the technique that lets today’s large language models (LLMs) move past their static training data and draw on current, external information. It turns a brilliant but sometimes unreliable storyteller into a well-informed researcher.

- Method: RAG lets the model consult verified external sources before answering, so responses are grounded in evidence.

- Hallucinations: Using cited passages reduces factual mistakes and inconsistencies on knowledge-seeking questions.

- Freshness: External context adds up-to-the-minute facts beyond the model’s training cutoff.

- Auditing: Answers can be traced directly back to the original source documents.

Why RAG Matters for Enterprise AI

Retrieval-Augmented Generation is now widely used in enterprise AI. It addresses two common weaknesses in large language models: hallucination and knowledge cutoff. A model that relies only on static training data produces fluent but fabricated statements when it guesses beyond the facts it has memorized.

Because the model’s knowledge stops at its last training date, it struggles with time-sensitive analysis. RAG adds a lookup step and prioritizes external verification. The model keeps its strength in fluent writing while anchoring claims in sources, so it behaves more like a careful researcher than a storyteller.

RAG Methodology: The Two-Step Process

RAG combines the power of information retrieval with the generative capability of an LLM. This two-step process ensures the output is grounded:

Step 1: Retrieval

The system receives the question and searches a curated store such as an internal wiki, a document index, or a knowledge graph. It selects a small set of highly relevant passages and trims them to fit the context window. The goal is concise, high-signal snippets rather than long blocks of text.
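The retrieval step can be sketched in a few lines of Python. This is a minimal illustration, not a production retriever: it assumes a tiny in-memory corpus and scores passages with bag-of-words cosine similarity, where a real system would more likely use embeddings or a search index. The names `retrieve`, `CORPUS`, `top_k`, and `max_chars` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, passages: list[str],
             top_k: int = 2, max_chars: int = 200) -> list[tuple[float, str]]:
    """Return up to top_k (score, passage) pairs, best first.

    Passages with no word overlap are dropped, and each kept passage is
    trimmed to max_chars as a stand-in for fitting the context window.
    """
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(p))), p) for p in passages]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(score, p[:max_chars]) for score, p in scored[:top_k]]


# Hypothetical curated store standing in for a wiki or document index.
CORPUS = [
    "Refunds are issued within 14 days of a return request.",
    "The office cafeteria opens at 8 am on weekdays.",
    "Return requests must include the original order number.",
]

hits = retrieve("How many days until refunds are issued after a return?", CORPUS)
for score, passage in hits:
    print(f"{score:.2f}  {passage}")
```

The unrelated cafeteria passage shares no words with the question, so it never reaches the model; only the short, relevant snippets do.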

Step 2: Augmented Generation

The passages and the question are given to the LLM with clear instructions to rely primarily on the provided evidence. Good setups ask for quotes and citations and require the answer to admit “not found” if the snippets do not contain the required fact. This approach prioritizes cited evidence over pattern-based guesses.
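One way to picture the augmented prompt is as a template that numbers each passage and spells out the citation and "not found" rules. The sketch below only builds the prompt string; the wording of the instructions and the function name `build_prompt` are assumptions, and the call to an actual LLM is deliberately left out because it depends on the provider.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble a grounded prompt: numbered sources, rules, then the question."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below.\n"
        "Quote the supporting text and cite it as [n].\n"
        'If the sources do not contain the answer, reply exactly "not found".\n\n'
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "How many days until refunds are issued?",
    [
        "Refunds are issued within 14 days of a return request.",
        "Return requests must include the original order number.",
    ],
)
print(prompt)
```

Numbering the sources gives the model a stable handle for citations, which is what later makes each claim traceable to a document.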

Good practice: Quality depends heavily on the corpus and the retriever. To maximize performance, organizations must keep sources clean and current, log citations for every claim, add reranking to weed out near duplicates, and define a fallback so the assistant returns “not found” instead of inventing an answer.
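Two of these practices, weeding out near duplicates and falling back to "not found", can be illustrated with a short sketch. It assumes passages arrive as (score, text) pairs from some retriever, uses Jaccard word overlap as a simple duplicate test, and treats the thresholds `max_overlap` and `min_score` as hypothetical tuning knobs rather than recommended values.

```python
import re


def token_set(text: str) -> set[str]:
    """Set of lowercase alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap ratio between two token sets (1.0 means identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def drop_near_duplicates(scored: list[tuple[float, str]],
                         max_overlap: float = 0.8) -> list[tuple[float, str]]:
    """Keep the highest-scoring copy of each group of near-identical passages."""
    kept: list[tuple[float, str]] = []
    for score, passage in sorted(scored, key=lambda s: s[0], reverse=True):
        tokens = token_set(passage)
        if all(jaccard(tokens, token_set(k)) < max_overlap for _, k in kept):
            kept.append((score, passage))
    return kept


def select_evidence(scored: list[tuple[float, str]],
                    min_score: float = 0.3) -> list[tuple[float, str]]:
    """Deduplicate, then keep only passages that clear the relevance bar."""
    return [(s, p) for s, p in drop_near_duplicates(scored) if s >= min_score]


def respond(scored: list[tuple[float, str]]) -> str:
    """Return "not found" when no passage is strong enough to answer from."""
    evidence = select_evidence(scored)
    if not evidence:
        return "not found"
    return "Evidence: " + " | ".join(p for _, p in evidence)


scored = [
    (0.90, "Refunds are issued within 14 days of a return request."),
    (0.88, "Refunds are issued within 14 days of a return request!"),
    (0.10, "The cafeteria opens at 8 am."),
]
print(respond(scored))
print(respond([(0.10, "The cafeteria opens at 8 am.")]))
```

Checking the fallback before the model is ever called is one design option; another is to let the model decide, using the "not found" instruction in the prompt. Many setups combine both.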

Real-World Applications of RAG

RAG’s ability to provide current and verifiable information makes it indispensable across critical business functions:

- Enterprise Search: Employees ask questions over proprietary documents and receive synthesized answers with citations. Teams report up to 50 percent faster answer retrieval in internal trials.

- Customer Service: Assistants use the latest policies and product details, which lowers escalations by about 15 to 20 percent in pilot programs.

- Financial Research: Analysts query recent filings and reports, so summaries reflect current disclosures rather than last year’s memory.

- Legal Compliance: Lawyers use RAG to query legal databases, ensuring advice is based on the most recent statutes and case law.

This grounding in facts transforms AI from an experimental tool into a reliable business asset.

In a nutshell

RAG retrieves first and writes second. It does not make models perfect, but it makes them auditable, current, and far more reliable for real work by anchoring generative output in verified external facts.

Frequently Asked Questions About RAG

Q: What is the main difference between RAG and fine-tuning?

Fine-tuning changes the model’s weights and behavior and typically requires new training runs. RAG keeps the model unchanged and adds external context at query time, which makes updates faster and cheaper.

Q: Does RAG remove hallucinations?

No. It reduces them by prioritizing cited passages and allowing a “not found” response when evidence is missing, but the model can still misread or misapply snippets.

Q: Where do the facts come from?

From verified sources stored in systems such as vector databases, relational databases, or knowledge graphs. Retrieval quality and corpus curation directly determine answer quality.

Q: What is the biggest challenge?

Corpus governance. Keep sources clean and current, define refresh schedules and allowlists, log citations, and add reranking to boost passage relevance.
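As a rough illustration of what an allowlist and a refresh schedule might look like in code, the sketch below splits documents into those that may be indexed and those that need review first. The `Document` fields, the source names in `ALLOWED_SOURCES`, and the 180-day `MAX_AGE` are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Document:
    doc_id: str
    source: str          # where the document came from
    last_reviewed: date  # when someone last confirmed it is accurate


# Hypothetical governance settings.
ALLOWED_SOURCES = {"policy-wiki", "product-docs"}
MAX_AGE = timedelta(days=180)


def is_indexable(doc: Document, today: date) -> bool:
    """A document qualifies if its source is allowlisted and it is fresh."""
    return doc.source in ALLOWED_SOURCES and today - doc.last_reviewed <= MAX_AGE


def partition(docs: list[Document], today: date) -> tuple[list[str], list[str]]:
    """Split document ids into (indexable, needs_review)."""
    indexable = [d.doc_id for d in docs if is_indexable(d, today)]
    needs_review = [d.doc_id for d in docs if not is_indexable(d, today)]
    return indexable, needs_review


docs = [
    Document("refund-policy", "policy-wiki", date(2024, 3, 1)),
    Document("old-pricing", "policy-wiki", date(2023, 1, 1)),
    Document("forum-thread", "random-forum", date(2024, 5, 20)),
]

indexable, needs_review = partition(docs, today=date(2024, 6, 1))
print("index:", indexable)
print("review:", needs_review)
```

Passing `today` explicitly keeps the check reproducible, which helps when the same rule is run as a scheduled job and in tests.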

Understand the foundational AI models: What is an LLM?

Explore RAG’s structured data requirement: What Are Knowledge Graphs?