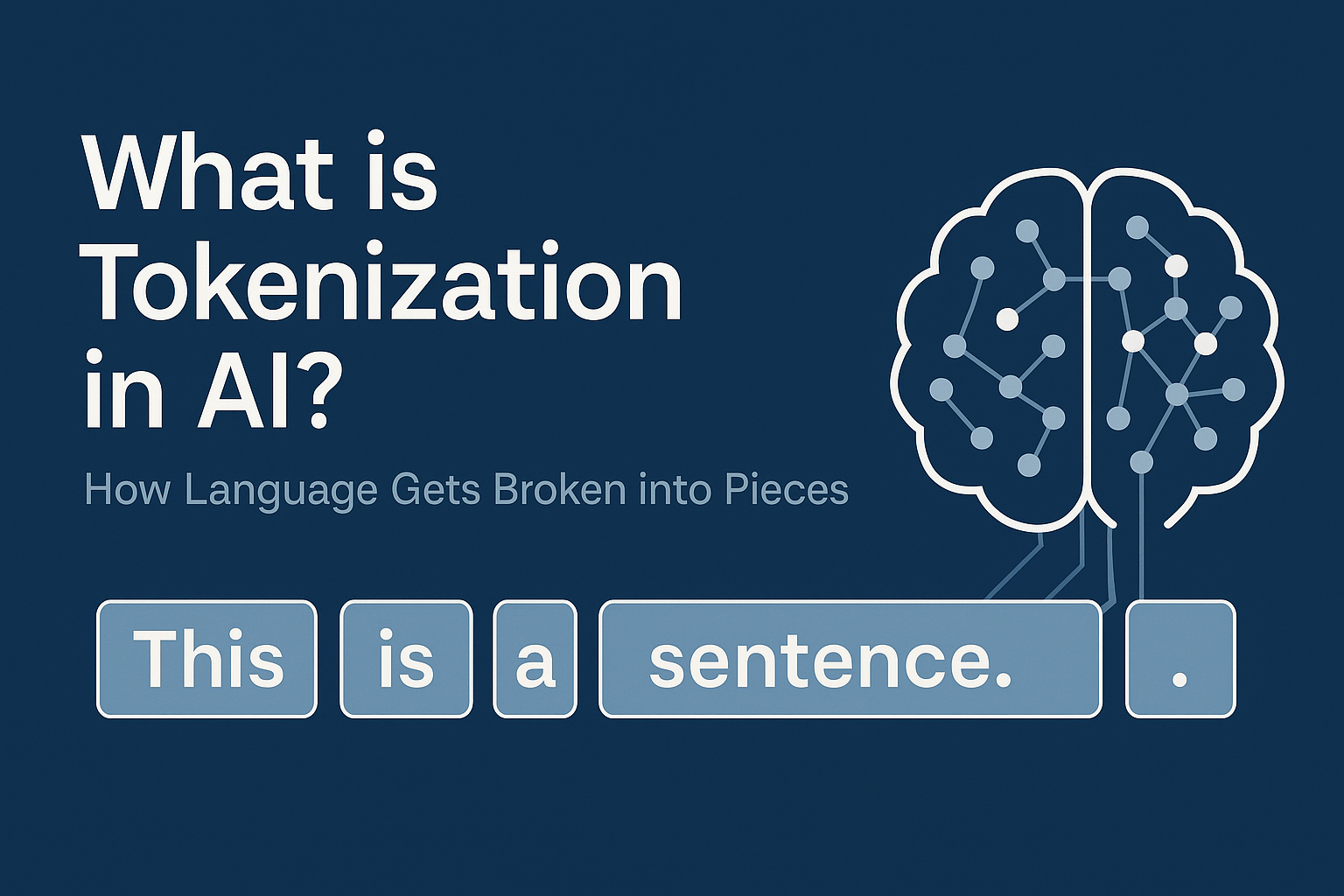

What is Tokenization in AI?

Tokenization is one of the most fundamental steps in how machines process human language. Before an AI system like ChatGPT can generate a response, it must first break your input into smaller, manageable pieces called tokens. These tokens can represent words, parts of