What is Tokenization in AI?

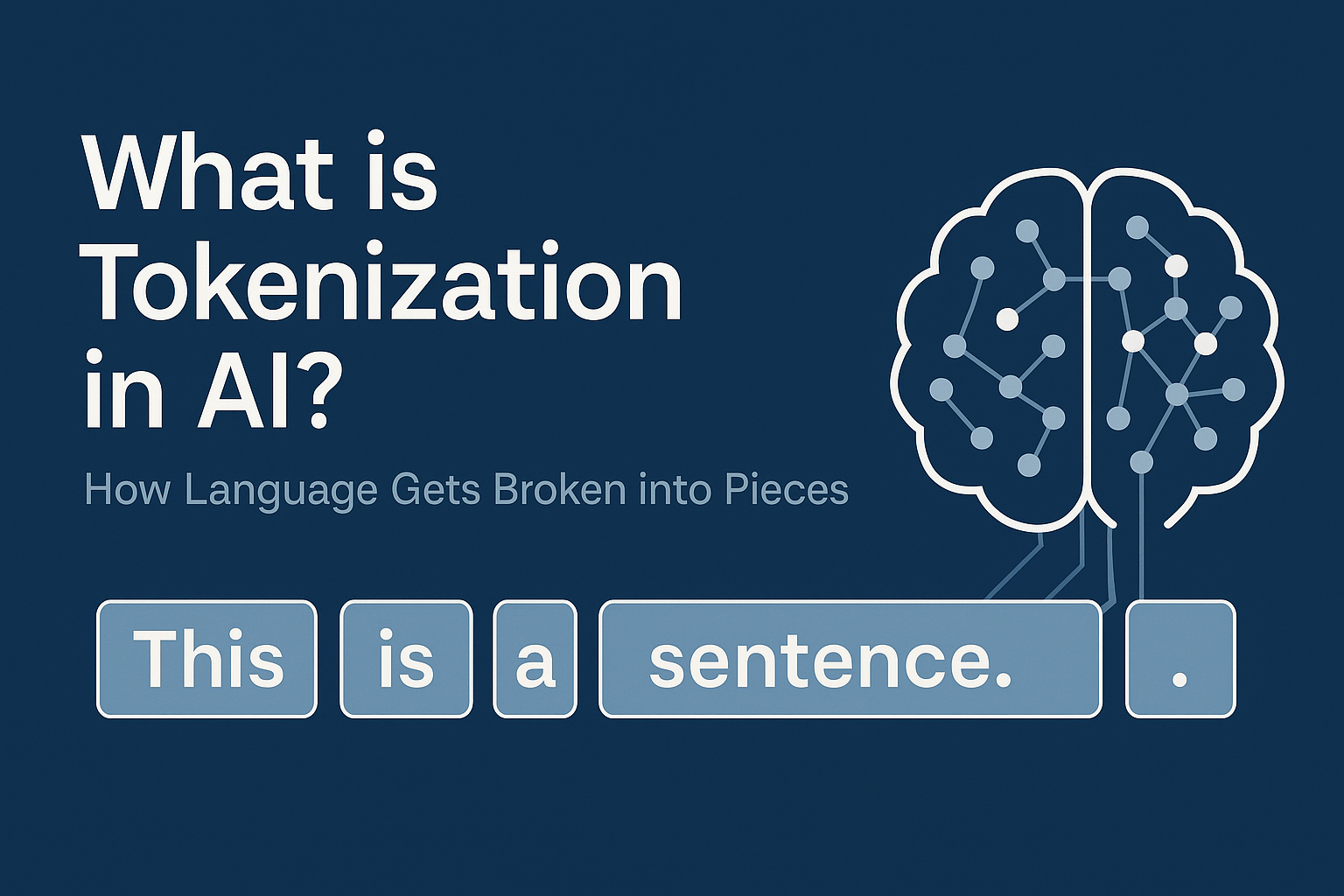

Tokenization is one of the most fundamental steps when machines process human language. Before an AI like ChatGPT can generate a response, it first needs to break down your input into smaller, manageable pieces called tokens. These tokens can represent words, parts of words, or even individual characters, depending on the model’s design.

Instead of working with full sentences or raw text, AI models work with these tokens. Each token is mapped to an integer ID, and each ID is then converted into a vector, a list of numbers that the computer can store and manipulate. Working with tokens lets the model analyze sentence structure and meaning through these numerical representations. It also gives a consistent unit for measuring and limiting the size of inputs and outputs during interactions with the model.

Different AI systems use different tokenization strategies. Some models split inputs at the word level, treating each full word as a single token. Others go deeper, breaking words into subword units like prefixes and suffixes. For example, the word “unbelievable” might be tokenized into “un,” “believ,” and “able.” Some models even tokenize character by character, depending on their architecture and application needs.
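The three strategies above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular model works. The word-level splitter uses a simple regular expression. The subword splitter uses greedy longest-match against a fixed vocabulary, which is the approach WordPiece-style tokenizers use when encoding text. GPT-family models use byte-level BPE, which instead applies merge rules learned from data, so treat this as a simplification. `TOY_VOCAB` is a hypothetical vocabulary chosen only to reproduce the “unbelievable” example.

```python
import re

def word_tokenize(text: str) -> list[str]:
    # Word level: each run of letters/digits is one token; punctuation marks are separate tokens.
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text: str) -> list[str]:
    # Character level: every non-whitespace character becomes its own token.
    return [ch for ch in text if not ch.isspace()]

def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    # Subword level: greedy longest-match against a fixed vocabulary.
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining piece first, shrinking it until a vocabulary entry matches.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No vocabulary entry matched: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens

# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of pieces from data.
TOY_VOCAB = {"un", "believ", "able"}

print(word_tokenize("That is unbelievable!"))       # ['That', 'is', 'unbelievable', '!']
print(char_tokenize("unbelievable"))                # ['u', 'n', 'b', 'e', 'l', 'i', 'e', 'v', 'a', 'b', 'l', 'e']
print(subword_tokenize("unbelievable", TOY_VOCAB))  # ['un', 'believ', 'able']
```

The character fallback means the subword splitter never fails on unknown text. It just produces more, smaller tokens, which is one reason subword schemes sit between the compact but brittle word level and the robust but long character level.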

Understanding how tokenization works also helps explain why AI responses sometimes seem clipped or incomplete. A model can only handle a fixed number of tokens at once, a limit known as its context window, and that limit covers both your input and the AI’s output. Some versions of GPT-4 have a context window of up to 128,000 tokens. Long conversations or detailed prompts can therefore run into this limit, even if they still feel manageable to a human reader.
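Because input and output share one token budget, the space left for a reply is simple subtraction. The sketch below assumes the 128,000-token figure mentioned for some GPT-4 versions and ignores any separate cap on output length that a real service may also impose. `remaining_output_budget` is a hypothetical helper, not part of any API.

```python
# Assumed combined input + output limit, taken from the figure quoted for some GPT-4 versions.
CONTEXT_WINDOW = 128_000

def remaining_output_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens remain for the model's reply after the prompt is counted."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Tokens used by the prompt are unavailable to the reply; never report a negative budget.
    return max(context_window - prompt_tokens, 0)

# A long conversation history that already uses 120,000 tokens leaves little room for a reply.
print(remaining_output_budget(120_000))  # 8000
# A prompt larger than the window leaves no room at all (it would have to be truncated).
print(remaining_output_budget(130_000))  # 0
```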

In a simple example, if you type the sentence “Learning is powerful.”, a tokenizer might split it into tokens like [“Learning”, “is”, “power”, “ful”, “.”]. Each of these pieces is then mapped to a numerical ID and a vector inside the AI system, and the model uses these vectors to predict the next token of its response.
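The step from tokens to numbers can be sketched as two lookups: token to integer ID, then ID to vector. This minimal example takes the token list from the “Learning is powerful.” example as given. The vocabulary is a hypothetical one built from just those five tokens, and the embedding vectors are random placeholders. In a trained model, the vocabulary is fixed by the tokenizer and the vectors are learned parameters.

```python
import random

# Token sequence from the example, taken as given.
tokens = ["Learning", "is", "power", "ful", "."]

# Hypothetical vocabulary: assign each distinct token an integer ID (sorted for reproducibility).
vocab = {tok: idx for idx, tok in enumerate(sorted(set(tokens)))}

# Step 1: map each token to its integer ID.
token_ids = [vocab[tok] for tok in tokens]

# Step 2: look up each ID in an embedding table that holds one vector per vocabulary entry.
# Random placeholder values here; a real model learns these numbers during training.
EMBED_DIM = 4
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(len(vocab))]
vectors = [embedding_table[i] for i in token_ids]

print(token_ids)                      # [1, 3, 4, 2, 0]
print(len(vectors), len(vectors[0]))  # 5 4
```

The IDs here only reflect sorting order in this toy vocabulary; real tokenizers assign IDs from their own fixed vocabularies.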

🔎 In a Nutshell

Tokenization is the hidden process that transforms language into a machine-readable format. By slicing text into manageable parts, AI systems can better understand and generate responses, one token at a time.

📚 For more foundational terms and concepts, check out our full AI Glossary.